DR. MICHAEL NEBELING

I'm an Associate Professor at the University of Michigan, where I direct the Information Interaction Lab. I'm interested in designing and studying novel methods and tools to create next-generation user interfaces. My focus over the last few years has been on augmented, virtual, and mixed reality (XR) experiences, with the goal of making them easy, safe, and beneficial to use. My research contributions often involve designing and building new toolkits, authoring tools, and interaction techniques, in an effort to balance usability, accessibility, and privacy needs.

At UM, I lead the Information Interaction Lab in the School of Information (UMSI). I've also been involved with the Center for Academic Innovation where I created the Extended Reality for Everybody specialization on Coursera (see below). I'm faculty adviser to the student-led Alternate Reality Initiative (ARI). I also co-direct our HCI seminar series as part of the Michigan Interactive and Social Computing (MISC) research group, teach introductory and advanced AR/VR courses (SI 559/659), and lead or contribute to several UM and externally funded research projects.

I regularly serve on the program and organizational committees of the ACM CHI, UIST, and EICS conferences. For example, I am going to be UIST 2026 general co-chair with my colleague and friend, Dr. Steve Oney, and CHI 2026 subcommittee co-chair with Dr. Hasti Seifi. Previously, I was EICS 2024 general co-chair, UIST 2021 Papers co-chair, and EICS 2015 papers co-chair. I received a Disney Research Faculty Award (for excellence in science), a Mozilla Research Award (for reinventing the browser as an AR/VR application delivery platform), an Epic MegaGrant (for educational tools in XR), and a Meta Reality Labs gift (for my work on fairness and inclusion in XR). Working with UM's Center for Academic Innovation, I started my role as XR Faculty Innovator-in-Residence with the UM-wide XR Initiative in 2019. I created the XR MOOC series, a three-course AR/VR specialization on Coursera. I joined UM in 2016 after completing a postdoc in the Human-Computer Interaction Institute at Carnegie Mellon University and a PhD in the Department of Computer Science at ETH Zurich. Upon my promotion to Associate Professor in 2022, I spent my sabbatical at Meta Reality Labs Research.

Why XR Research Matters

As an HCI researcher, I became interested in XR in 2016 when I started my new lab at the University of Michigan. I want AR/VR to become useful and effective interaction modes that bring real benefits to users, taking into account their context, task, abilities (or disabilities), and preferences. In terms of application domains, I'm particularly interested in the role XR technologies can play in improving education and accessibility.

Personally, I found it quite hard to get started in XR and learned everything by doing. Seeing how my students and I struggled, my initial mission was to lower the barrier to entry in an effort to democratize AR/VR research and design. For a while, I used the tools and toolkits I created in research to support my own teaching, but they became hard to maintain, so I now mostly teach the same principles and methods with whatever the latest tools are. For a few years, I've taught courses on rapid prototyping for XR with colleagues and friends, and it's been fun! Since then, I've branched out and worked on all kinds of XR issues (there are so many!).

I've explored both the positive and negative sides of XR. For example, I investigated how XR can support rapid prototyping of novel design concepts [CHI'18a, CHI'19a, CHI'20a], improve instructional experiences [CHI'20b, CHI'21, CHI'22a], make physical environments more inclusive [CHI Play'19, CHI'24W], ensure safer use of XR particularly in social settings [CHI'23, UIST'23], and tell sensitive narratives without overstimulation [DIS'22]. While I'm an enthusiast and see a bright future for XR, in particular AR, I've also looked at the key barriers to entry and major challenges in XR design [CHI'20c, CHI'22b] as well as responsible design for XR [CHI'20W, CSCW'20W, CHI'22W] and how XR could be accidentally or intentionally misused, such as through deceptive design patterns which could lead to more severe outcomes given the immersive qualities of XR [TOCHI'24].

I like reflecting on the role and impact of research and often debate the challenges of working in certain areas or using particular methods. For example, in Playing the Tricky Game of Toolkits Research I discuss the issues I faced in finding my research community during my doctoral studies at ETH Zurich. I also like complaining a lot about XR methods and tools (I consider it part of my job :P). For example, in The Trouble with Augmented Reality/Virtual Reality Authoring Tools I do just that. While I like to complain, I also try to help pave a way forward. For example, in XR Tools and Where They Are Taking Us I analyzed a decade of research on AR/VR tools and identified what I call forward vectors for the research community to pursue.

Extended Reality for Everybody (2020)

Below is one of my video lectures from the Extended Reality for Everybody specialization on Coursera. In this short video, I explain the key concepts and terminology in the XR space. It is an example of a video lecture we created in two versions, first using traditional video and then using these more immersive formats. It is also an example of a simple form of virtual production which provided a basis for our XRStudio research project (see below).

PhD in Technical HCI Office Hour (2024)

I'm always interested in talking to students about doing a PhD in technical HCI. Below is a video of my online office hour with several faculty friends, going through a list of crowdsourced questions that I received from prospective PhD students. I've met a lot of great students this way and really enjoyed this. This year was the 6th edition. You can find the ones from 2019-2023 in my YouTube playlist.

Research at the University of Michigan (2020-today)

Since starting the Information Interaction Lab in September 2016, I have developed a new research focus on AR/VR interfaces. My earlier XR research contributed new techniques, tools, and technologies to make AR/VR interface design and development easier and faster. My vision was that anyone, even without a background in 3D modeling, animation, or programming, can be an active participant in AR/VR design. My initial focus was on rapid prototyping, but I have since expanded my scope to promote more accessible and safer XR design.

For example, below is a video of Reframe, an AR storyboarding tool prompting interaction designers to address security and privacy (S&P) issues by design. This project was led by my PhD student Shwetha Rajaram and presented at UIST 2023.

Research at the University of Michigan (2016-2020)

- MRAT (CHI'20 paper): a system to collect user interaction data of AR/VR users and visualize it in-situ using mixed reality visualizations

- XRDirector (CHI'20 paper): a collaborative immersive authoring system that adapts roles from filmmaking for virtual production of 3D and AR/VR scenes with interactive content

- 360proto (CHI'19 paper): a 360 paper prototyping tool to rapidly create AR/VR prototypes from paper and bring them to life on AR/VR devices, based on a set of emerging paper prototyping templates for immersive AR/VR environments, AR marker overlays and face masks, VR controller models and menus, and 2D screens and HUDs

- ProtoAR (CHI'18 paper): a rapid physical-digital prototyping tool for mobile augmented reality apps that enables rapid transition from physical prototyping using paper wireframes and Play-Doh models to digital prototyping based on multi-layer cross-device authoring and interactive capture tools

- XD-AR (EICS'18 paper): an augmented reality platform that we are developing for multi-user multi-device augmented reality experiences that allow users to edit the physical world in realtime

- 360Anywhere (EICS'18 paper): a platform enabling multi-user collaboration scenarios using augmented reality projections of 360 video streams

Please find out more on the Information Interaction Lab web site.

Research at Carnegie Mellon University (2015-2016)

Using my Swiss NSF mobility grant at CMU, I investigated ways of orchestrating multiple devices and crowds to enable complex information seeking, sensemaking and productivity tasks on small mobile and wearable devices. I also contributed to the Google IoT project led by CMU. This work led to three papers at ACM CHI 2016:

- WearWrite (CHI'16 paper, CHI'16 talk, UIST'15 demo, tech report on arXiv): a wearable interface enabling smartwatch users to orchestrate crowd workers on more powerful devices to complete writing tasks on their behalf

- XDBrowser (CHI'16 paper, CHI'16 talk, CHI'17 paper, CHI'17 talk): a new cross-device web browser that I used to elicit 144 multi-device web page designs for five popular web interfaces leading to seven cross-device web page design patterns

- Snap-To-It (CHI'16 paper): a mobile app allowing users to opportunistically interact with appliances in multi-device environments simply by taking a picture of them

Research at ETH Zurich (2009-2015)

Beyond Responsive Design

- XCML (WWW journal article): a domain-specific language that tightly integrates context-aware concepts and multi-dimensional adaptivity mechanisms using context matching expressions based on a formally-defined context algebra

- jQMetrics (CHI'11 paper, jQMetrics on GitHub): an evaluation tool for web developers and designers to analyze web page layout and perform measurements along a set of empirically derived metrics

Adaptation to Touch and Multi-touch

- jQMultiTouch (EICS'12 paper, jQMultiTouch on GitHub): a jQuery-like toolkit and rapid prototyping framework for multi-device/multi-touch web interfaces providing a unified method for the specification of gesture-based multi-touch interactions

- W3Touch (CHI'13 paper): an evaluation tool for web designers to assess the usability of web interfaces on touch devices as well as a toolkit for automating the adaptation process to different touch device contexts based on simple usability metrics

Crowdsourcing Design and Evaluation

- CrowdAdapt (EICS'13 paper): a web site plugin for crowdsourcing adaptations for additional viewing conditions not considered in the original design

- CrowdStudy (EICS'13 paper): a toolkit and general framework for recruiting crowds as test users and guiding them through asynchronous remote web site usability evaluations

User-Defined Multimodal Interactions

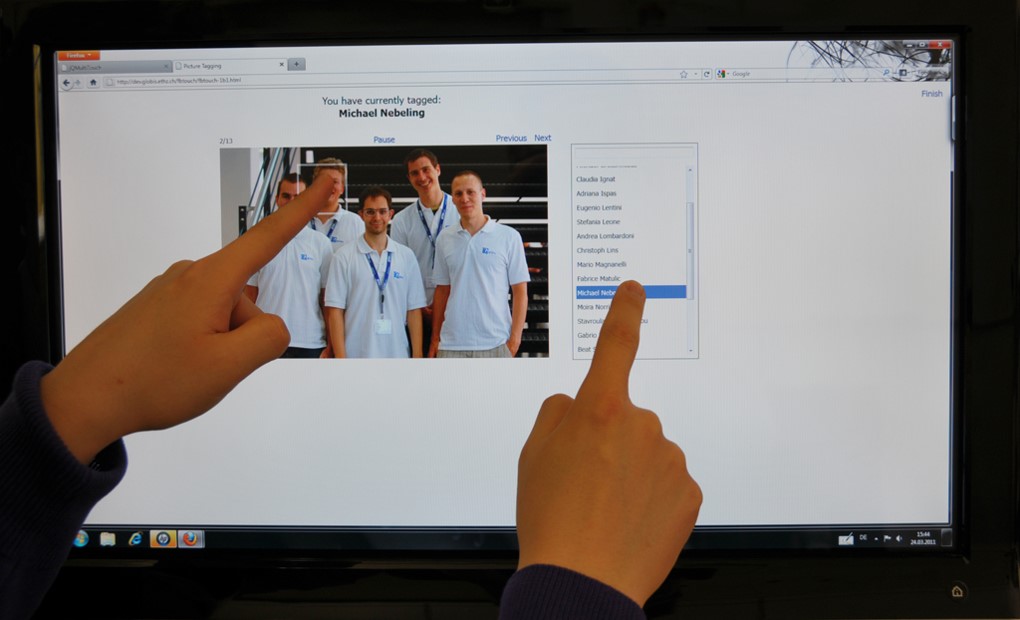

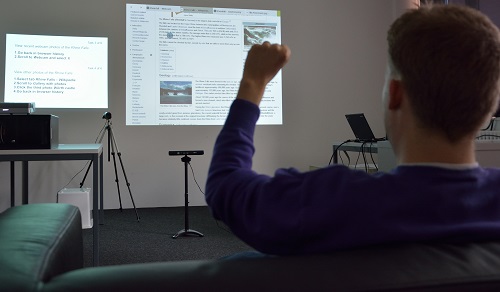

- Kinect Browser (ITS'14 paper): a new multimodal web browsing system designed based on a replication of Morris's Web on the Wall guessability study

- Kinect Analysis (EICS'15 paper): both a method and a tool for recording, managing, visualizing, analyzing and sharing user-defined multimodal interaction sets based on Kinect

Cross-Device Interfaces

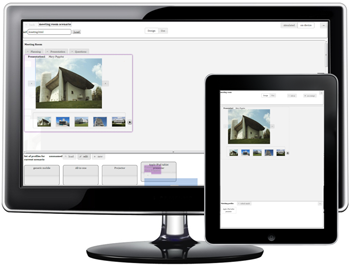

- MultiMasher (WISE'14 paper): a rapid prototyping tool for cross-device applications supporting mashing up multiple web applications and devices and integrating them into a new cross-device user experience

- XDStudio (CHI'14 paper): an authoring environment for cross-device user interfaces supporting interactive development on multiple devices based on a combination of simulated authoring and on-device authoring

- XDKinect (EICS'14 paper): a toolkit for developing cross-device user interfaces and interactions using Kinect to mediate interactions between multiple devices and users

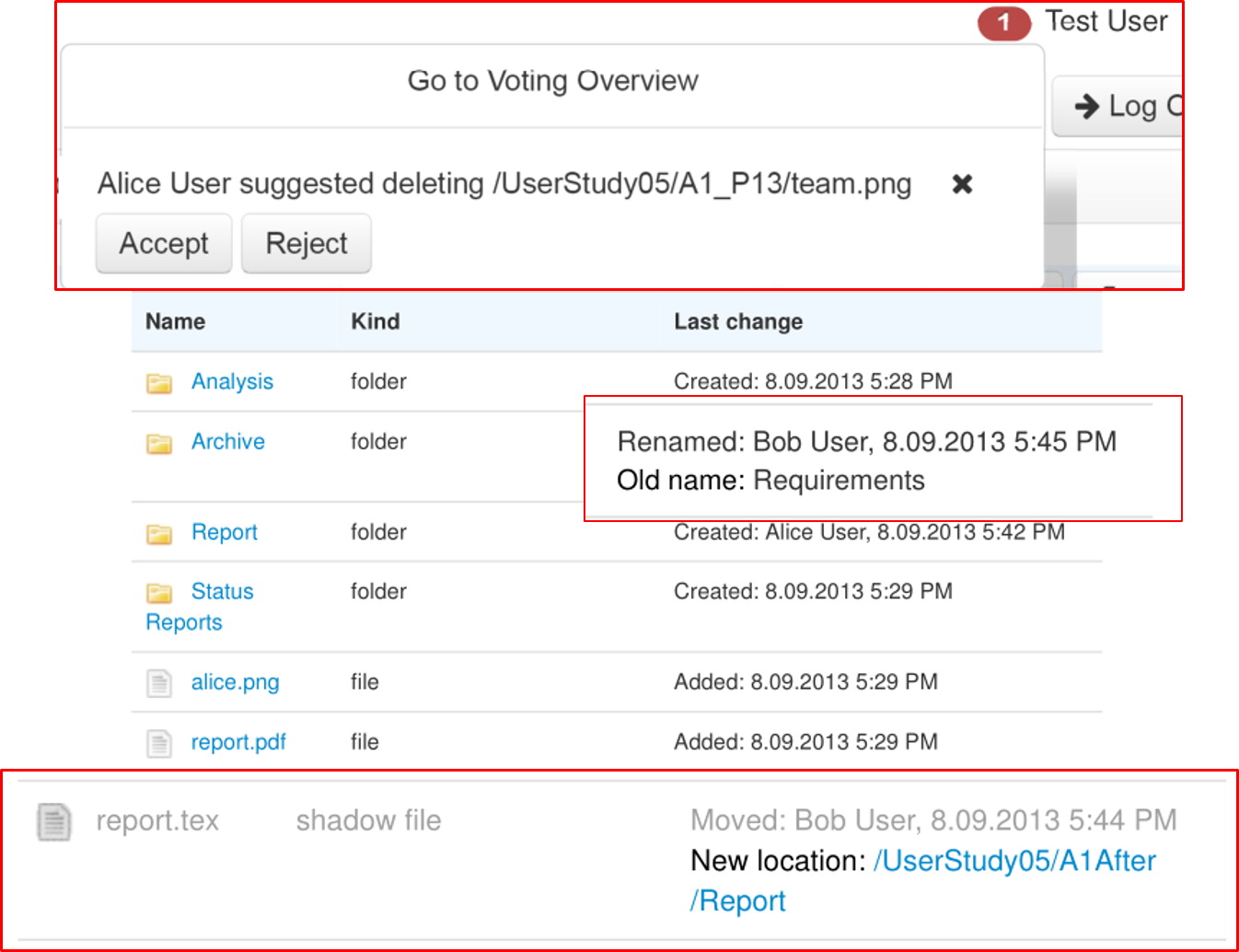

Personal Information Management and Cloud Storage

- PIM Light (AVI'14 paper): a study based on Adobe Photoshop Lightroom that develops a process for engineering personal information management tools by example

- MUBox (CHI'15 paper): a system introducing multi-user aware extensions for working in teams on top of personal cloud storage solutions such as Dropbox

Selected Publications

For the full list of publications, please check Google Scholar and DBLP.

Students supervised by me are underlined.

Contact

You can reach me by email via nebeling@umich.edu.

You can also find me on Twitter @michinebeling.

News and Events

My PhD student, Shwetha Rajaram, and postdoctoral fellow, Dr. Janet Johnson, will be on the job market. Please give them your full consideration; they are both awesome!

2025

• Did a big joint project in SI 659 Developing AR/VR Experiences focused on XR safety training for journalists. A difficult topic, but it was very exciting to work on it with two of our Knight-Wallace fellows.

• My current and former students got a few papers in at CHI 2025, yay! Some even with awards. Proud papa!

• Going to attend CHI in April. First time in Japan! Hajime!

• Moving to our new building, yay!

• Good news! The XR Graduate Certificate will reboot in Fall 2025.

• Probably a few other things happened that I currently don't remember :P

Fall 2024

• Teaching two sections of SI 559 Intro to AR/VR Application Design.

• Contributed to our Innovation Summit focused on the role of XR and GenAI in residential and online learning, spearheaded by the Center for Academic Innovation.

Winter 2024

• Teaching SI 659 Developing AR/VR Experiences. Made it a bit less broad by focusing on Unity as opposed to covering WebXR, Unity, and Unreal. Seeing some good projects this semester!

• Doing some really cool research focused on future always-on, head-worn AR; bringing AI agents into MR; and making AR/VR safer with GenAI.

• Submitted a few papers to UIST 2024 after the CHI 2024 disaster :P

Fall 2023

• Back to Michigan. Welcoming postdoc Janet Johnson to the lab. Continuing work with PhD student Shwetha Rajaram. Teaching SI 559 Intro to AR/VR. All the good stuff.

• Latest edition of our PhD in technical HCI office hour.

2022/2023

• On sabbatical at Meta Reality Labs Research in Redmond.

Fall 2022

• Going to do another PhD in technical HCI office hour in October/November.

• Attending UIST 2022 in Bend, Oregon.

• Bought a few tickets to CHI 2023 in Germany :)

August 2022

• Shwetha Rajaram finished an important PhD milestone and is now a PhD candidate. Congrats!

• Starting my sabbatical at Meta Reality Labs Research in Redmond.

Summer 2022 Hosting Veronika Krauss from Germany and Vittoria Frau from Italy in my lab. Going kayaking!

May 2022 Received tenure effective September 2022. Yay! :)

Winter 2022

• Held three office hours for my XR MOOC Specialization on Coursera.

• Gave an Autodesk Research HCI and Visualization Speaker Series talk and a CMU HCII Seminar talk on empowering novice XR designers.

Fall 2021

• Co-chaired UIST 2021 with Ranjitha Kumar and Jeff Nichols.

• Co-hosted our XR social meet-up at UIST 2021 with Pedro Lopes and David Lindlbauer!

• Gave a Stanford HCI Seminar talk on empowering novice XR designers.

• Gave a guest lecture on Extended Reality to 100+ police officers in INTERPOL Innovation Center's Virtual Discussion Room series.

• Co-taught our XR prototyping course with Mark Billinghurst at ISMAR 2021 and SIGGRAPH Asia 2021.

• My PhD student, Shwetha Rajaram, defended her pre-candidacy milestone!

• Submitted 5 papers, 2 workshops, and 1 course proposal to CHI 2022. Thanks to my co-authors!

Summer 2021

• Participated in our Research Experience for Master's Students (REMS) program for the fourth time.

• Participated in a think tank event organized by Michigan Medicine and Microsoft.

• Co-hosted our UIST 2021 program committee meeting with almost 80 Associate Chairs!

• Gave two lectures on HCI and CSCW in our annual Human Factors Engineering summer course.

CHI 2021

• Co-taught a course on XR prototyping with Mark Billinghurst. We'll repeat it at ISMAR 2021 and SIGGRAPH Asia.

• Co-presented XRStudio paper with my students.

• Spontaneously organized a CHI @ XR meet-up with more than 100 people. Let's do it again!

Spring 2021

• Submitted my initial tenure materials. Woohoo!

• 360theater with Max and Katy accepted to EICS 2021.

• Received an Epic MegaGrant to support my work on using XR for virtual production of immersive lecture materials. So cool!

2020

December

• XR MOOC series launched on Coursera!

• CHI 2021 course on rapid prototyping of XR experiences with Mark Billinghurst accepted. It'll feature the techniques I teach in my XR MOOC series!

• CHI 2021 paper (conditionally accepted) on XRStudio, a live virtual production system for streaming immersive instructional experiences (where the instructor is in VR and students see the lecture in MR).

• Enjoyed giving a guest talk at Snap Research hosted by Andrés Monroy-Hernández.

November Enjoyed participating in Albrecht Schmidt's and Katrin Wolf's Conversations in Mixed Reality!

UIST 2020 Nice to catch up with many friends at the first virtual UIST. Going to be UIST 2021 Papers Co-chair with Ranjitha Kumar. Fun!

CSCW 2020 Co-organized our workshop on the future of social AR with guest talks from Julian Bleecker and Jaron Lanier. Yeah!

CHI 2020 Co-organized our workshop on ethics of mixed reality. Also published pages on our MRAT and XRDirector projects.

April Attended XR Midwest and joined a talk with Jeremy Nelson on teaching and learning with XR at scale. Thanks to the organizers from ARI!

March MRAT and a study on key barriers to entry for novice AR/VR creators I co-authored with friends from Canada both won a Best Paper Award at CHI. Now you're cooking!

February Interviewed many great prospective PhD students. Made two offers. Can't wait to start working with them!

January Started producing my XR MOOC slated for launch in 2020. I'm very excited to share my experience from my four years of research and teaching in AR/VR at UM.

2019

December

• Three papers conditionally accepted to CHI 2020: XRDirector (a role-based collaborative immersive authoring tool), MRAT (mixed reality analytics toolkit), and an interview study with 21 participants on key barriers for AR/VR creators. Aloha!

• Selected as a finalist for MTRAC funding, Michigan's translational research grant, to take some of my AR/VR innovations and create an AR/VR design platform.

November

• Twitch Office Hours: Doing a PhD in technical HCI (ask your questions here)

• MIT HCI Seminar talk: Prototyping Mixed Reality experiences.

• MUM conference keynote: Making Mixed Reality a thing that designers do and users want.

October

• Won't make it to UIST :-(

• Participating as one of only eight selected teams for UM's Celebrate Invention event, showcasing 360proto (will be released soon!) and other technologies created in my lab.

• Won Best Paper Award at CHI Play for our iGYM project with Roland Graf.

September Aloha, CHI 2020! Apart from a few paper submissions, I also community-sourced two Spotify playlists: one for authors, and one for reviewers. Check them out, they are really great!

August Went kayaking with the lab! :-)

July

• Attending SIGGRAPH 2019, my first! Happy to receive the Disney Research|Studios Award. Thanks to Markus Gross and Bob Sumner for the nice little ceremony!

• Had a great time at the Microsoft Research Faculty Summit! Thanks to Jaime Teevan for inviting me.

• Gave a talk at Adobe Research on Mixed Reality Prototyping. Got very useful feedback! Thanks to my host, Wilmot Li.

June

• iGYM paper accepted to CHI PLAY 2019, woohoo team!

• Invited to give the opening keynote at MUM 2019.

• Attended the UIST 2019 PC. That was a lot of fun! All my papers got rejected :P

May

• Had a great trip to Seattle visiting friends at UW CSE, iSchool, and HCDE! Gave a DUB seminar on Prototyping Mixed Reality Experiences.

• Also met UW Reality Lab folks.

• Attended CHI 2019 in Glasgow! Held my CHI 2019 AR/VR prototyping course.

April UIST submissions! Finished first time teaching my more technical AR/VR course. Had a lot of fun with A-Frame and Unity!

January Got a Disney Research Faculty Award. This award will enable me to implement and test my XR workbench concept. I'm excited!

2018

December Two papers conditionally accepted to CHI 2019: one on my 360proto tool for making AR/VR interfaces from paper and the other is a conceptual framework for classifying mixed reality experiences developed based on expert interviews and a literature survey, led by my former postdoc, Max Speicher. Also, my CHI 2019 AR/VR prototyping course was accepted.

November Got a Mozilla Research Grant for a next-generation AR/VR browser. This award will support several new students joining my lab next semester! Joined UIST 2019 PC. Looking forward to visiting Stanford next summer and to your submissions!

October Attending parts of UIST, AWE, and ISMAR in Germany! Walter Lasecki is going to present our paper on Arboretum at UIST and I'll present The Trouble with AR/VR Authoring Tools at ISMAR.

September New semester starting. Teaching interaction design and my new AR/VR course! Joined the CHI 2019 PC on the Engineering Interactive Systems & Technologies subcommittee.

August Workshop paper The Trouble with AR/VR Authoring Tools accepted at ISMAR 2018.

July Became EICS 2019 Technical Chair. Max Speicher completed his postdoc in my lab. Good luck in the future!

June Visiting ETH Zurich and giving a talk on our AR research. Attending UIST 2018 PC meeting. Learned a lot, and had some fun. XDBrowser related paper for improving web accessibility got in. Brian presenting XD-AR and 360Anywhere at EICS 2018.

May Visiting Disney Studios! Also visiting Disney Research LA and giving a talk on our AR research. 360Anywhere accepted at EICS 2018.

April Wrapping up UIST 2018 submissions. Attending CHI 2018. XD-AR accepted at EICS 2018.

March Giving an Ignite UX talk on ProtoAR. Launching my AR Teach-Out. Giving a mini lecture on AR/VR to our incoming MSI students. Rob and Josh will join me as PhD students! Welcome to UM!

February Visiting Oculus Research (now Facebook Reality Labs) and giving a talk on our AR research. Also, excited to join the UIST 2018 PC!

January Two AR/VR course proposals accepted! Going to start teaching the AR/VR intro course in Fall 2018. Also, started filming our AR Teach-Out. Going to launch in March.

2017

December Three papers accepted at CHI 2018! New grants from Ford and Masco around AR/VR projects.

November Started developing two new courses on Augmented/Virtual/Mixed Reality. There was also a first article about it in the Michigan Record.

October My proposal for an AR Teach-Out accepted. Looking forward to producing it over the next few months! I was also on the PhD thesis committee of Maria Husmann at ETH Zurich. Excellent cross-device research!

September In a great team effort, my lab submitted six papers to CHI 2018. Thanks everyone! Here's a showreel of our summer research. I'm very proud of this work with my students.

August Became a Co-PI in a Lenovo-sponsored project around AR interfaces.

June Getting ready for my four summer interns. And, back on the CHI Engineering PC for 2018. Looking forward to your submissions!

May CHI 2017 in Denver with Max, Brian, and former CMU colleague, Nikola Banovic. LOL! Presented XDBrowser 2.0 and my #HCI.Tools position paper on Playing the Tricky Game of Toolkits Research.

March/April Max Speicher joining my lab as Research Fellow, Brian Hall as PhD Student, and Erin McAweeney as REMS Fellow. Exciting! Also won an ESSI grant!

February Invited to the #HCI.Tools workshop at CHI for my position paper Playing the Tricky Game of Toolkits Research.

January Teaching SI 582 Intro to Interaction Design this spring.

2016

December XDBrowser 2.0 accepted at CHI 2017.

November Giving XDUI tutorial and attending RoomAlive toolkit tutorial at ISS'16. Meeting my former ETH Zurich colleagues Fabrice Matulic and Maria Husmann. A lot of fun!

September Starting as Assistant Professor at UMSI. Setting up the Information Interaction Lab. Teaching SI 482 Interaction Design Studio. A first!

August Wrapping up my Swiss NSF Advanced.Postdoc Mobility fellowship at CMU. Had a great time. Many new friends for life. Thanks for the support!

June Organizing the EICS'16 XDUI workshop (proposal). XDUI tutorial accepted at ISS'16.

May Accepted Assistant Professor position at UMSI. Attended CHI'16 in San Jose, CA. Participated in microproductivity and cross-surface workshops. Led Systems@CHI lunch. Chaired end-user programming session. Presented WearWrite (video) and XDBrowser (video).

January-May Invited talks at TU Munich, Yale, Virginia Tech, Indiana-Purdue, UMSI, UW CSE (video), Adobe Research, and Google.

April Joined HCOMP'16 PC.

March Bluewave accepted at EICS'16.

January Accepted at CHI'16 microproductivity and cross-surface workshops.

2015

December Attended CHI'16 PC meeting in San Jose, CA. Three papers accepted: WearWrite, XDBrowser, Snap-To-It. Also received a new Swiss NSF research grant.

November Attended UIST'15 in Charlotte, NC. Presented WearWrite demo.

October Joined EICS'16 PC.

September CHI'16!!!

August WearWrite demo accepted at UIST'15. Joined IUI'16 and AVI'16 PCs.

July Published WearWrite tech report.

June Co-chaired EICS'15 and co-organized XDUI workshop. Joined CHI'16 PC.

May Attended CHI'15. Received Honorable Mention for MUBox. Participated in mobile collocated interactions workshop and presented my SNSF project. Chaired session on multi-device interaction.

March Kinect Analysis and XDSession accepted at EICS'15.

February Started working at CMU HCII.

Acknowledgments

My work at Michigan is primarily situated in the School of Information. I frequently collaborate with colleagues in the Center for Academic Innovation. My lab has received gifts and in-kind support from Disney, Epic, Meta, Microsoft, Google, and Lenovo.